You’re sitting there, watching your server metrics climb. CPU hitting 80%. Memory creeping up. Users complaining about slow load times.

Time to scale. But which way?

Ugh.

Here’s the thing: Most tutorials make this way more complicated than it needs to be. Let me break it down in plain English, with real examples from our app.

—

What The Heck Are We Even Talking About?

Vertical Scaling (Scale Up)

Think of it like this: You’ve got one server doing all the work. You make that server bigger, faster, stronger. More CPU cores. More RAM. Better storage.

It’s like upgrading your laptop from 8GB to 32GB of RAM. Same machine. More muscle.

Horizontal Scaling (Scale Out)

This one’s different. Instead of making one server bigger, you add more servers. Three servers. Five servers. Fifty servers. A load balancer sits in front and divvies up the work.

Instead of buying a beast, just get 3-4 decent laptops.

Got it? Cool. Let’s dig deeper.

—

Vertical Scaling: The “Make It Bigger” Move

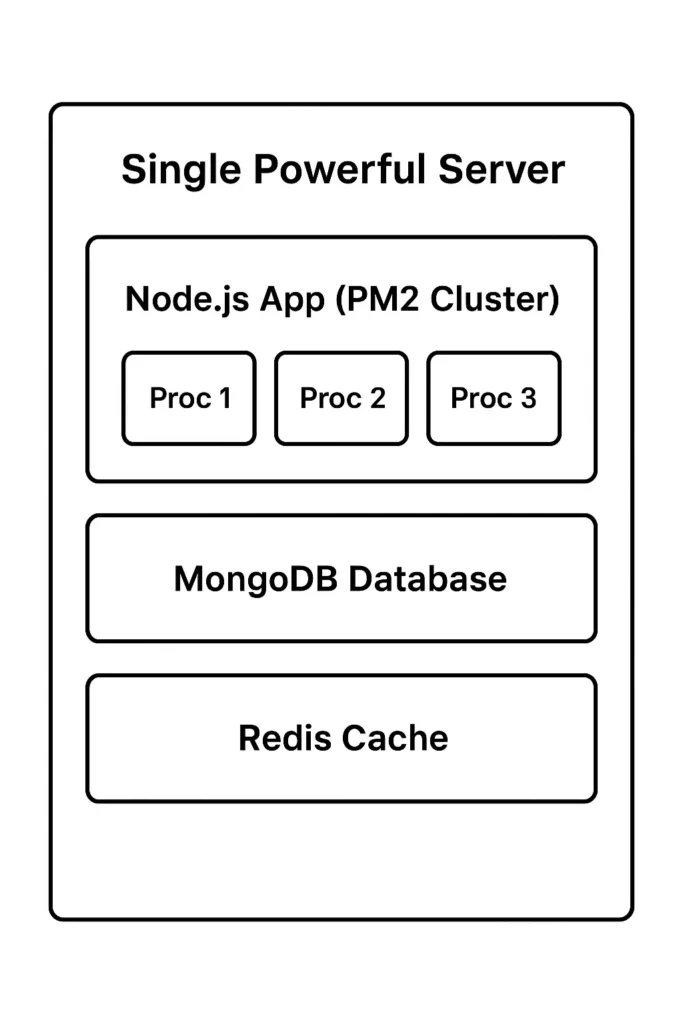

What It Actually Looks Like

You’ve got one server running your Node.js backend. Maybe it’s a basic DigitalOcean droplet with 2GB RAM and 1 CPU.

Traffic spikes? Time to upgrade.

Before:

- Server: 2GB RAM, 1 CPU

- Cost: $12/month

- Handles: ~500 concurrent users

After:

- Server: 8GB RAM, 4 CPUs

- Cost: $48/month

- Handles: ~2000 concurrent users

- Storage: SSD (faster database queries)

- Network: more bandwidth

Boom. Same architecture. More oomph.

The Good Stuff ✅

1. Simple AF. No architectural changes. Just… bigger server.

2. No load balancer needed. One server, one endpoint. Easy.

3. Low latency. Everything runs on one machine. No network hops.

4. Easier debugging. One place to look when things break.

The Not-So-Good Stuff ❌

1. Single point of failure. That server dies? Your whole app is down. Yikes.

2. You’ll hit a ceiling. There’s a limit to how big one machine can get.

3. Costs get weird. Past a certain size, each extra CPU and GB costs disproportionately more.

4. Downtime for upgrades. Need to resize? App goes offline.

—

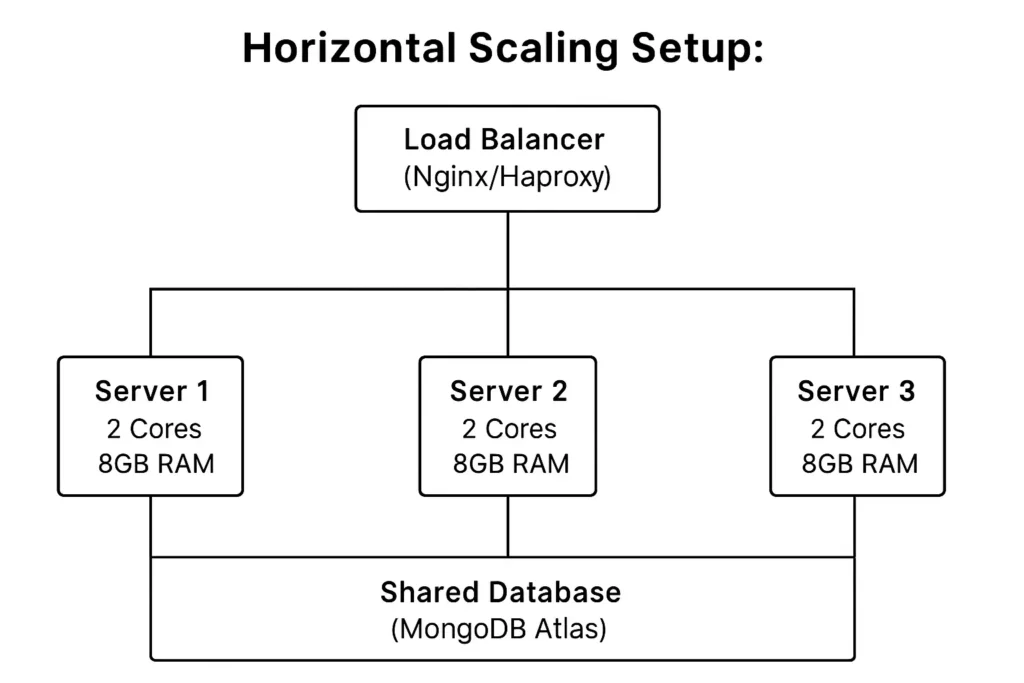

Horizontal Scaling: The “Add More Servers” Move

What It Actually Looks Like

Instead of one big server, you run multiple smaller ones. A load balancer (like Nginx) sits in front and sends requests to whichever server has capacity.

The Setup:

Three smaller servers instead of one big one. Similar total resources. But way more resilient.

Your App Scaled Horizontally:

Server 1:

- Runs Express.js backend

- Handles auth routes

- Processes job applications

Server 2:

- Runs Express.js backend

- Handles social feed posts

- Processes course enrollments

Server 3:

- Runs Express.js backend

- Handles admin operations

- Processes newsletter subscriptions

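All three servers run identical code and can serve any route; the lists above are just a snapshot of what each one happens to be handling right now. Nginx routes traffic based on load. If Server 1 dies? Servers 2 and 3 keep trucking.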
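Here’s a minimal sketch of the least-connections strategy behind “routes traffic based on load” (Nginx offers it through its `least_conn` upstream directive; its default is round-robin). The `servers` array and `pickServer` function are hypothetical stand-ins for state a load balancer keeps internally. In practice you configure Nginx or a managed load balancer rather than write this yourself.

```javascript
// Hypothetical in-memory view of the backend pool a load balancer tracks.
const servers = [
  { name: 'server-1', healthy: true, activeConnections: 4 },
  { name: 'server-2', healthy: true, activeConnections: 12 },
  { name: 'server-3', healthy: true, activeConnections: 9 },
];

// Least-connections: pick the healthy server with the fewest in-flight
// requests. Returns null if every server is down.
function pickServer(pool) {
  let best = null;
  for (const s of pool) {
    if (!s.healthy) continue; // never route to a server that failed checks
    if (best === null || s.activeConnections < best.activeConnections) {
      best = s;
    }
  }
  return best;
}

console.log(pickServer(servers).name); // server-1

// Server 1 dies: traffic shifts to the least-busy server still standing.
servers[0].healthy = false;
console.log(pickServer(servers).name); // server-3
```

Least-connections tends to beat plain round-robin when some requests (a big job-application upload, say) take much longer than others.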

Important: Your app needs to be stateless for this to work well. Don’t keep session data in any one server’s memory. That’s what Redis or your database is for.

The Good Stuff ✅

1. Fault tolerance. One server dies? Others keep going. Users barely notice.

2. Much higher ceiling. Need more capacity? Add another server. (Eventually the database becomes the bottleneck; see Mistake #3.)

3. Better cost efficiency. Two small servers often cost less than one giant one.

4. Zero-downtime deployments. Update one server while others serve traffic.

5. Geographic distribution. Put servers in different regions. Faster for everyone.
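Zero-downtime deployments usually mean a rolling deploy: pull one server out of rotation, update it, confirm it’s healthy, put it back, repeat. A minimal sketch, assuming you supply four hypothetical async hooks (`removeFromPool`, `deploy`, `isHealthy`, `addToPool`) wired to your own load balancer and deploy tooling. It halts on the first failed health check, so a bad release never reaches every server.

```javascript
// Rolling deploy sketch. The hooks object is hypothetical: wire each
// function to your load balancer API and deploy tooling.
async function rollingDeploy(servers, hooks) {
  const updated = [];
  for (const server of servers) {
    await hooks.removeFromPool(server); // stop sending it new traffic
    await hooks.deploy(server);         // ship the new version
    if (!(await hooks.isHealthy(server))) {
      // Leave the broken server out of rotation and stop the rollout;
      // servers not yet updated keep serving the old version.
      throw new Error(`Deploy halted: ${server} failed its health check`);
    }
    await hooks.addToPool(server);      // back into rotation
    updated.push(server);
  }
  return updated;
}

// Example run with stand-in hooks that only record what happened.
const log = [];
const fakeHooks = {
  removeFromPool: async (s) => log.push(`out:${s}`),
  deploy: async (s) => log.push(`deploy:${s}`),
  isHealthy: async () => true,
  addToPool: async (s) => log.push(`in:${s}`),
};
rollingDeploy(['server-1', 'server-2', 'server-3'], fakeHooks)
  .then((done) => console.log(done, log));
```

Design choice: updating one server at a time means three servers run at two-thirds capacity during a deploy, so roll out during quieter periods or add a temporary server first.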

The Not-So-Good Stuff ❌

1. More moving parts. More servers = more complexity. More things that can break.

2. Need a load balancer. Extra piece of infrastructure to manage.

3. Network latency. Every hop between the load balancer, app servers, Redis, and the database adds milliseconds.

4. Shared state gets tricky. Sessions, caching, and file uploads need careful consideration.

—

The Cost Reality Check

Let’s talk money. Because that matters.

Vertical Scaling Costs

Example Scenario:

- Starting: 2GB RAM, 1 CPU = $12/month

- Upgraded: 8GB RAM, 4 CPUs = $48/month

- Max upgrade: 64GB RAM, 16 CPUs = $480/month

The Math:

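- 2GB → 8GB: 4x the resources for 4x the cost. Linear so far.

- 8GB → 64GB: 8x the RAM and 4x the CPUs for 10x the cost. The curve bends upward.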

- You can only go so big before it gets prohibitively expensive

Horizontal Scaling Costs

Example Scenario:

- 3 servers × 2GB RAM, 1 CPU = 3 × $12 = $36/month

- Total resources: 6GB RAM, 3 CPUs

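- Not quite the same capacity as one 8GB/4CPU server: 6GB/3 CPUs for $36 vs 8GB/4 CPUs for $48. Per GB it’s $6 either way, and you’ll also pay for a load balancer. The real savings show up at the high end, where the 64GB box works out to $7.50/GB.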

The Math:

- More flexibility in scaling

- Can start small and add incrementally

- Better cost efficiency at scale
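Running both scenarios as price per GB of RAM makes the trade-off easier to see. This is plain arithmetic on the example prices above, not current provider pricing, and it leaves out the load balancer the horizontal setup also needs.

```javascript
// Example configurations from the cost scenarios above (monthly USD).
const configs = [
  { label: '1 × 2GB/1CPU', ramGB: 2, cpus: 1, monthly: 12 },
  { label: '1 × 8GB/4CPU', ramGB: 8, cpus: 4, monthly: 48 },
  { label: '1 × 64GB/16CPU', ramGB: 64, cpus: 16, monthly: 480 },
  { label: '3 × 2GB/1CPU', ramGB: 6, cpus: 3, monthly: 36 },
];

// Monthly price per GB of RAM.
function pricePerGB({ ramGB, monthly }) {
  return monthly / ramGB;
}

for (const c of configs) {
  console.log(`${c.label}: $${pricePerGB(c).toFixed(2)}/GB`);
}
// 1 × 2GB/1CPU: $6.00/GB
// 1 × 8GB/4CPU: $6.00/GB
// 1 × 64GB/16CPU: $7.50/GB
// 3 × 2GB/1CPU: $6.00/GB
```

At the small end, splitting into three servers doesn’t buy cheaper capacity; it buys smaller increments and fault tolerance. The per-GB premium only appears on the largest machine.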

—

When Should You Pick Each One?

Go Vertical When:

✅ You’re just starting out. Keep it simple.

✅ Traffic is predictable. No wild spikes.

✅ You want to deploy and forget it.

✅ Budget is tight. Start with one good server.

✅ Your app stores state locally. Not stateless yet.

Real talk: If you’re pre-launch or just launched? Start here. Don’t overcomplicate it.

Go Horizontal When:

✅ You need high availability. Downtime = lost revenue.

✅ Traffic is unpredictable. Black Friday? Launch day? Unknown spikes.

✅ You want global reach. Serve users in different regions.

✅ Your app is stateless. Session data in Redis, files in S3.

✅ Zero-downtime deployments matter. Can’t take the app offline.

Real talk: Once you’re at 10k+ daily active users, or if downtime costs you money? Time to think horizontal.

—

Common Mistakes (Don’t Do These)

❌ Mistake #1: Going Horizontal Too Early

The Problem:

Team of 2, just launched, 100 users. Immediately sets up Kubernetes with 5 servers.

Why It’s Bad:

- Massive overkill

- Wastes money

- Adds complexity you don’t need

- Slows down development

Fix:

Start vertical. Keep it simple. Scale when you actually need to.

❌ Mistake #2: Storing State on Servers

The Problem:

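```javascript
// BAD: Storing sessions in memory
const express = require('express');
const session = require('express-session');

const app = express();

app.use(session({
  secret: 'your-secret',
  // No store = express-session's default MemoryStore, which lives inside
  // this one process and is invisible to every other server
}));
```

When you scale horizontally, users log in on Server 1, then their next request goes to Server 2. Session is gone. User is logged out.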

Fix:

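```javascript
// GOOD: Store sessions in Redis, shared by every server
// Assumes connect-redis v8 (v7 uses a default export) and node-redis v4+;
// express, session, and app are set up as in the example above
const { createClient } = require('redis');
const { RedisStore } = require('connect-redis');

const redisClient = createClient(); // defaults to localhost:6379
redisClient.connect().catch(console.error);

app.use(session({
  secret: 'your-secret',
  store: new RedisStore({ client: redisClient }),
  resave: false,            // Redis store refreshes expiry via touch()
  saveUninitialized: false, // don't store empty sessions
}));
```

❌ Mistake #3: Not Planning for Database Load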

The Problem:

You scale to 10 backend servers. All 10 are hammering the same MongoDB instance. Database becomes the bottleneck.

Fix:

- Use connection pooling

- Set up read replicas

- Cache aggressively (Redis)

- Consider database sharding at scale
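“Cache aggressively” usually means the cache-aside pattern: check the cache first, fall back to the database on a miss, then store the result with a TTL. A minimal sketch under two assumptions: a `Map` stands in for Redis so it runs on its own, and `fetchUserFromDb` is a hypothetical placeholder for your real MongoDB query. With real Redis, all ten servers share one cache instead of each keeping its own.

```javascript
// In-memory stand-in for Redis so the sketch runs without a server.
// In production, use shared Redis calls instead (GET, and SET with EX).
const cache = new Map();

let dbCalls = 0;
// Hypothetical database query; replace with your real MongoDB call.
async function fetchUserFromDb(id) {
  dbCalls += 1;
  return { id, name: `user-${id}` };
}

// Cache-aside: read from cache, fall back to the DB, then populate the cache.
async function getUser(id, ttlMs = 60_000) {
  const key = `user:${id}`;
  const hit = cache.get(key);
  if (hit && hit.expiresAt > Date.now()) {
    return hit.value; // cache hit: no database round trip
  }
  const value = await fetchUserFromDb(id); // cache miss: query the DB once
  cache.set(key, { value, expiresAt: Date.now() + ttlMs });
  return value;
}
```

Two requests for user 42 within a minute hit the database once. When that user’s data changes, delete or overwrite the cached key, or readers see stale data until the TTL runs out.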

❌ Mistake #4: Ignoring Health Checks

The Problem:

Server 1 crashes. Load balancer keeps sending it traffic. Users get errors.

Fix:

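Expose a health check endpoint:

```javascript
// Health check endpoint
// Assumes the redisClient from the Redis example above and a Mongoose
// connection; swap in whatever your app actually depends on
const mongoose = require('mongoose');

app.get('/health', async (req, res) => {
  try {
    await redisClient.ping();                   // Redis reachable?
    if (mongoose.connection.readyState !== 1) { // 1 = connected
      throw new Error('MongoDB not connected');
    }
    res.status(200).json({ status: 'ok' });
  } catch (err) {
    // A non-2xx status tells the load balancer to stop sending traffic here
    res.status(503).json({ status: 'error', error: err.message });
  }
});
```

Then configure your load balancer to use it. Heads up: open-source Nginx only does passive checks (it marks a server failed after real requests error out). Active probing of `/health` needs NGINX Plus or a managed load balancer.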
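On the load balancer’s side, health checking boils down to probing `/health` on each server and pulling a server out of rotation only after several consecutive failures, so a single blip doesn’t evict it. A minimal sketch of that bookkeeping; the thresholds and the `recordProbe`/`checkAll` names are hypothetical, and managed load balancers expose equivalent settings you configure rather than code.

```javascript
// Hypothetical thresholds: evict after 3 straight failures,
// re-admit after 2 straight successes.
const UNHEALTHY_AFTER = 3;
const HEALTHY_AFTER = 2;

// Update one backend's state from the result of a single /health probe.
function recordProbe(backend, ok) {
  if (ok) {
    backend.failures = 0;
    backend.successes += 1;
    if (!backend.healthy && backend.successes >= HEALTHY_AFTER) {
      backend.healthy = true; // recovered: send traffic again
    }
  } else {
    backend.successes = 0;
    backend.failures += 1;
    if (backend.healthy && backend.failures >= UNHEALTHY_AFTER) {
      backend.healthy = false; // stop routing requests here
    }
  }
  return backend;
}

// Probe every backend once. `probe` is any async (url) => boolean.
async function checkAll(backends, probe) {
  for (const b of backends) {
    let ok = false;
    try {
      ok = await probe(`${b.url}/health`);
    } catch {
      ok = false; // network errors and timeouts count as failures
    }
    recordProbe(b, ok);
  }
}
```

Each backend starts as `{ url, healthy: true, failures: 0, successes: 0 }`. On Node 18+ you could run it on a timer with the global `fetch`, e.g. `setInterval(() => checkAll(backends, async (url) => (await fetch(url, { signal: AbortSignal.timeout(2000) })).ok), 5000)`, where a 503 from the endpoint counts as a failure.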

—

The Bottom Line

Start simple. Scale when you need to.

Your Path:

1. Now: Optimize your current server. Enable PM2 cluster mode. Use Redis caching.

2. When traffic grows: Upgrade server (vertical scaling).

3. When you hit limits or need high availability: Go horizontal. Add servers. Use a load balancer.
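PM2’s cluster mode in step 1 is built on Node’s own `cluster` module. Node runs your JavaScript on a single thread, so one process can’t use all the cores you paid for when you scale up to 4 CPUs. A rough sketch of the same idea using only the standard library, assuming a plain `http` server as a stand-in for your Express app; PM2 layers restarts, logging, and zero-downtime reloads on top of this.

```javascript
const cluster = require('node:cluster');
const http = require('node:http');
const os = require('node:os');

// One worker per CPU core (availableParallelism needs Node 18.14+).
function workerCount() {
  return typeof os.availableParallelism === 'function'
    ? os.availableParallelism()
    : os.cpus().length;
}

// cluster.isPrimary needs Node 16+ (older versions call it isMaster).
function startCluster(port = 3000) {
  if (cluster.isPrimary) {
    const n = workerCount();
    for (let i = 0; i < n; i++) cluster.fork();
    // Replace any worker that crashes so capacity doesn't silently shrink.
    cluster.on('exit', (worker) => {
      console.log(`worker ${worker.process.pid} died, forking a new one`);
      cluster.fork();
    });
  } else {
    // Workers share the port; by default (except on Windows) the primary
    // hands out incoming connections round-robin.
    http
      .createServer((req, res) => res.end(`handled by pid ${process.pid}\n`))
      .listen(port);
  }
}
```

Call `startCluster()` at the bottom of your entry file: the primary and every forked worker run that same file, and the `isPrimary` check decides which role each process plays.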

Don’t overthink it. Don’t scale prematurely. But when you need to scale? Now you know how.

—

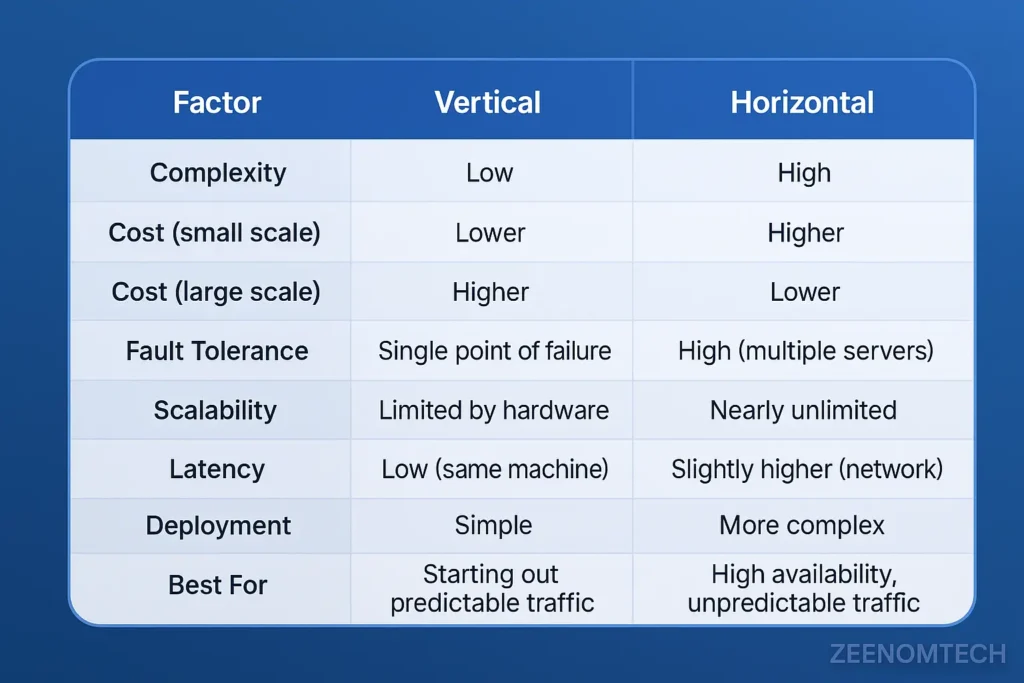

Quick Reference

—

Further Reading

If you found this helpful, you might also want to check out these posts:

The N+1 Problem That’s Killing Your App Performance (And How I Fixed It)

Before you even think about scaling, make sure you’re not shooting yourself in the foot with slow database queries. This post shows you exactly how to identify and fix the N+1 problem that’s probably slowing down your app right now.

Node.js Image Upload and Storage Simplified with Cloudinary

When you’re building stateless apps for horizontal scaling, you can’t store files on your servers. This guide shows you how to handle file uploads properly with Cloudinary.

Master Linux File System Commands: Easy Tips for Beginners

When you’re managing multiple servers, you’ll need to know your way around the Linux command line. This beginner-friendly guide covers the essential commands you’ll actually use.

Got questions? Hit me up. I’ve made all these mistakes so you don’t have to.